Transformer-based neural networks have surged to the forefront of AI research, effectively tackling tasks such as text generation and question answering. A network’s performance often improves as more parameters are added, as measured by lower perplexity and higher task accuracy. This encourages the industry to develop ever larger models.

Despite the push for bigger models, some compact models such as MiniCPM, with only 2 billion parameters, can hold their own against much larger contemporaries. Results like this point to a nuanced relationship between model size and data quality, since the quality of available training data may not improve as rapidly as the computational power used to train these models.

Advancements in AI Techniques

To understand this relationship, researchers are exploring several lines of work. Scaling laws describe how model performance improves as model size and data volume increase. Energy-based models, on the other hand, use a learnable, parameterized energy function to define a probability density over the data, and have become a versatile tool in machine learning.
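To make the energy-based idea concrete, here is a minimal sketch for a finite set of states. It assumes the common Boltzmann form, in which a state with energy E gets probability proportional to exp(-E); the function name `boltzmann_probs` and the example energies are illustrative and do not come from the research discussed here.

```python
import math

def boltzmann_probs(energies):
    """Turn a list of energies into probabilities p_i = exp(-E_i) / Z."""
    # Shifting by the minimum energy avoids overflow; the shift cancels in the ratio.
    e_min = min(energies)
    weights = [math.exp(-(e - e_min)) for e in energies]
    z = sum(weights)  # normalizing constant (partition function)
    return [w / z for w in weights]

# Hypothetical energies for three states: lower energy means higher probability.
energies = [0.0, 1.0, 2.0]
probs = boltzmann_probs(energies)
print(probs)
```

In a learned model the energies would be produced by a neural network rather than written by hand, and for continuous data the normalizing constant is usually intractable, which is a large part of what makes such models hard to train.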

Lastly, Hopfield models are classical networks that implement associative memory, a mechanism that may be connected to how transformer networks work so effectively.
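As a rough illustration of associative memory, the sketch below implements a small classical Hopfield network in plain Python. It assumes binary (+1/-1) patterns, the Hebbian storage rule, and asynchronous updates; the helper names and the two stored patterns are made up for the example and are not taken from the research described in this article.

```python
def train_hopfield(patterns):
    """Build a weight matrix from +1/-1 patterns with the Hebbian rule."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / n
    return weights

def recall(weights, state, sweeps=5):
    """Update units one at a time until the state settles on a stored memory."""
    s = list(state)
    for _ in range(sweeps):
        for i in range(len(s)):
            field = sum(weights[i][j] * s[j] for j in range(len(s)))
            s[i] = 1 if field >= 0 else -1
    return s

# Two hypothetical, mutually orthogonal patterns to memorize.
stored = [
    [1, -1, 1, -1, 1, -1, 1, -1],
    [1, 1, 1, 1, -1, -1, -1, -1],
]
weights = train_hopfield(stored)

# Corrupt one element of the first pattern and let the network repair it.
cue = list(stored[0])
cue[0] = -cue[0]
restored = recall(weights, cue)
print(restored == stored[0])
```

The network retrieves a complete memory from a partial or noisy cue, which is the sense in which it is "associative".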

Transformers Under the Microscope

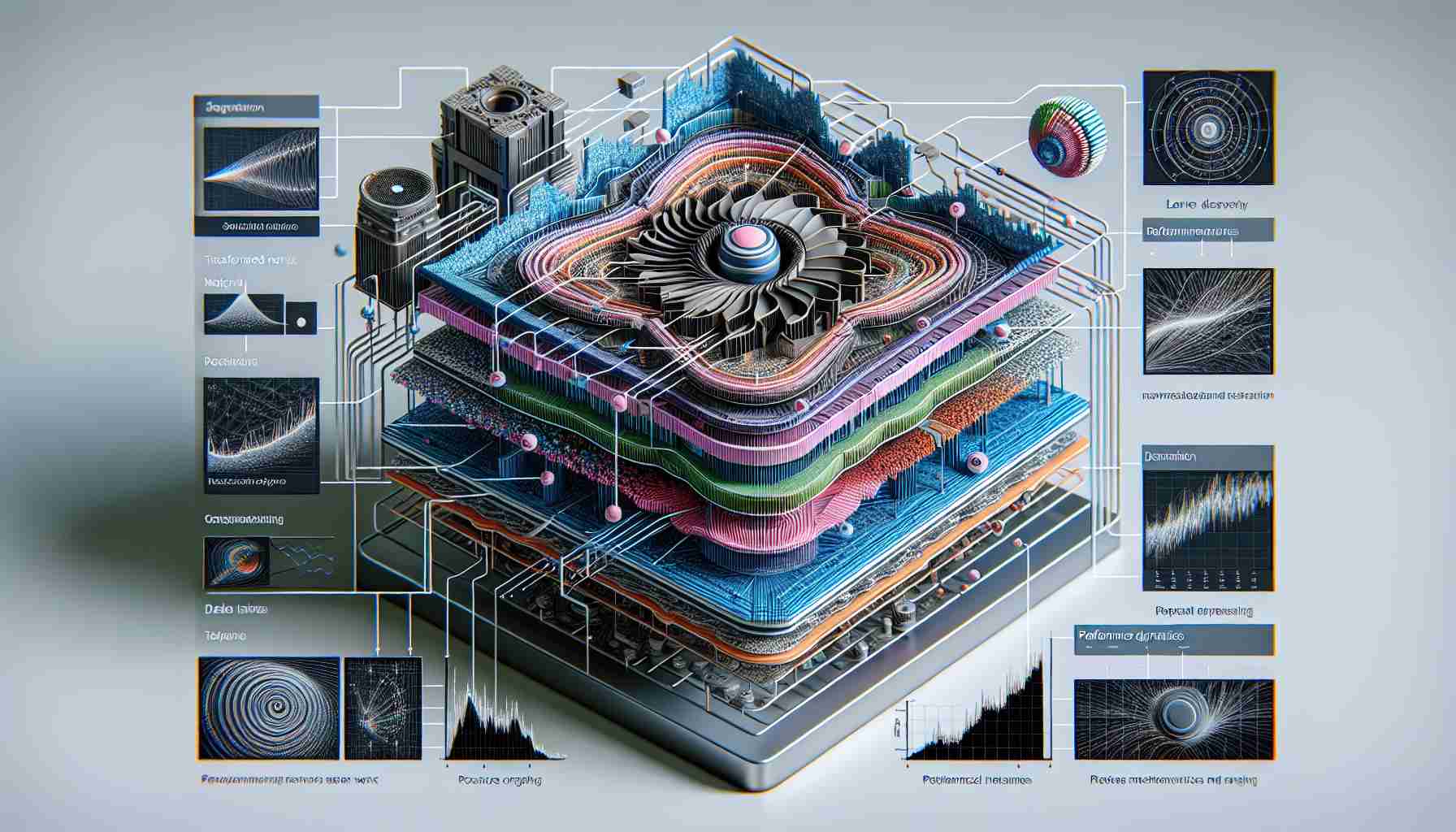

At the Central Research Institute of Huawei Technologies, researchers have developed a theoretical framework that describes the memorization behavior and performance of transformer-based language models. Experiments with GPT-2 across various data volumes shed light on optimal training strategies and their effect on cross-entropy loss, both of which matter when tuning the training process.

In practice, a 12-layer transformer language model with the GPT-2 architecture was trained on subsets of the OpenWebText dataset. These experiments showed that very small data subsets lead to overfitting, whereas training on larger subsets yields more stable losses. The same stability is illustrated by the predictable losses of vanilla transformers with different layer counts trained with a batch size of 8.
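For readers unfamiliar with the loss being tracked in such experiments, the following sketch shows how cross-entropy and perplexity relate. It assumes the standard definitions (mean negative log-probability of the correct next token, in nats, and its exponential); the probabilities are invented for illustration and are not results from the experiments.

```python
import math

def cross_entropy(correct_token_probs):
    """Mean negative log-probability the model gave to each correct next token."""
    return -sum(math.log(p) for p in correct_token_probs) / len(correct_token_probs)

def perplexity(correct_token_probs):
    """Perplexity is the exponential of the cross-entropy."""
    return math.exp(cross_entropy(correct_token_probs))

# Hypothetical model that assigns probability 0.25 to every correct token.
probs_for_correct_tokens = [0.25, 0.25, 0.25, 0.25]
loss = cross_entropy(probs_for_correct_tokens)
ppl = perplexity(probs_for_correct_tokens)
print(loss, ppl)
```

A model that is as uncertain as a uniform choice among four tokens has a perplexity of 4. Overfitting typically shows up as this loss continuing to fall on the training subset while it stalls or rises on held-out text.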

In summary, this research highlights the balance between memorization, data volume, and cross-entropy loss when training transformer-based neural networks, and offers guidance for future model training strategies.

Key Challenges in Transformer Neural Networks

One of the challenges facing transformer neural networks is the problem of scaling. Although adding more parameters can generally improve network performance, the required computational resources grow at the same time. This not only increases the cost and energy consumption but also raises concerns about the environmental impact of training such large models.
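A back-of-the-envelope sketch can show how quickly size and compute grow together. It relies on two widely used rules of thumb, both assumptions here rather than figures from the article: roughly 12 × d_model² weights per transformer block, and roughly 6 floating-point operations per parameter per training token. The configuration values (hidden width 768, vocabulary 50,257) are those commonly quoted for the smallest GPT-2 model.

```python
def approx_transformer_params(n_layers, d_model, vocab_size):
    """Rough parameter count: transformer blocks plus the token embedding table."""
    # Per block: about 4*d^2 for attention (Q, K, V, output projections)
    # plus about 8*d^2 for a feed-forward layer with a 4x hidden width.
    block_params = 12 * n_layers * d_model ** 2
    embedding_params = vocab_size * d_model
    return block_params + embedding_params

def approx_training_flops(n_params, n_tokens):
    """Rule-of-thumb training cost: about 6 FLOPs per parameter per token."""
    return 6 * n_params * n_tokens

# Assumed configuration resembling a 12-layer GPT-2 model.
n_params = approx_transformer_params(n_layers=12, d_model=768, vocab_size=50257)
print(n_params)  # 123532032, close to the commonly cited ~124M

# Doubling the width roughly quadruples the block parameters.
wider = approx_transformer_params(n_layers=12, d_model=1536, vocab_size=50257)
print(wider / n_params)
```

Because the estimated cost is proportional to both parameter count and token count, scaling up the model and the dataset together multiplies the compute bill.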

Furthermore, while large models can excel at certain tasks, they may not generalize well to new, unseen problems or datasets. Smaller models such as MiniCPM, mentioned above, intrigue researchers because they achieve comparable performance with far fewer parameters, suggesting that a better understanding of parameter efficiency is needed.

Another issue is overfitting, noted above in connection with small data volumes. As models grow larger and more complex, they may fit the training data too closely and perform poorly on unseen data, a problem that appropriate training strategies and regularization techniques aim to address.

Controversies and Debates

The AI research community is divided on the best path forward for developing neural networks. Some argue that continual scaling up of models is the way to achieve breakthroughs, while others believe that the key lies in innovating more efficient algorithms and architectures that do not require such vast computational power.

Another debate focuses on the accessibility and fairness of AI development. Large models require significant resources that often only well-funded organizations can afford, potentially leading to a concentration of power and influence in the hands of a few.

Advantages and Disadvantages of Transformer Neural Networks

The advantages of transformer neural networks include their unparalleled performance in tasks like natural language processing, thanks to their ability to capture long-range dependencies and context within sequences. They are also highly parallelizable, which makes them amenable to training with large datasets.
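The mechanism behind this ability is attention, in which every position can draw directly on every other position regardless of distance. Below is a minimal, single-head sketch of scaled dot-product attention in plain Python; it omits learned projections, masking, and batching, and the tiny input vectors are invented for illustration.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: each query gets a weighted mix of all values."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, however far apart in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        mixed = [sum(w * v[c] for w, v in zip(weights, values))
                 for c in range(len(values[0]))]
        outputs.append(mixed)
    return outputs

# Hypothetical toy input: identical keys, so the query weights both values equally.
queries = [[1.0, 1.0]]
keys = [[1.0, 0.0], [1.0, 0.0]]
values = [[0.0, 2.0], [4.0, 6.0]]
result = attention(queries, keys, values)
print(result)
```

Each query is processed independently of the others, which is why the computation parallelizes well. The cost is that the number of query-key scores grows with the square of the sequence length.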

However, there are disadvantages as well. Transformers require a lot of memory and computing power, particularly as the number of parameters grows. They may also struggle with efficiency, as they often require extensive fine-tuning and large volumes of training data to achieve their full potential.

Relevant Factors Not Mentioned

Not discussed above are architectural variants such as “Transformer-XL,” which aims to handle longer sequences more efficiently, and “Sparse Transformer,” which uses sparse attention mechanisms to reduce computational complexity.
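To give a feel for why sparse attention saves computation, the sketch below builds a simple local-window mask and counts how many query-key pairs remain. This is a simplified stand-in chosen for illustration, not the specific attention patterns used by the Sparse Transformer; the function names and sizes are hypothetical.

```python
def local_attention_mask(seq_len, window):
    """True where position i may attend to position j (within `window` steps)."""
    return [[abs(i - j) <= window for j in range(seq_len)] for i in range(seq_len)]

def count_allowed(mask):
    """Number of query-key pairs that still need a score computed."""
    return sum(sum(1 for allowed in row if allowed) for row in mask)

seq_len = 6
mask = local_attention_mask(seq_len, window=1)
sparse_pairs = count_allowed(mask)
dense_pairs = seq_len * seq_len
print(sparse_pairs, dense_pairs)  # 16 36
```

With a fixed window, the number of allowed pairs grows linearly with sequence length rather than quadratically, at the price of losing direct connections between distant positions.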

Another omission is the emergence of transfer learning in transformer models, where models are pre-trained on vast amounts of data and then fine-tuned for specific tasks. This has significantly improved performance while reducing the amount of labeled data required for training.

For additional information and the latest updates in the field of AI and transformer neural networks, one might visit the following resources:

– AI Research: DeepMind

– Neural Network Development: OpenAI

– Industry Standards and AI Trends: NVIDIA AI
